
amazon-orders for Python

amazon-orders is an unofficial library that provides a command line interface alongside a programmatic API that can be used to interact with Amazon.com’s consumer-facing website. This works by parsing website data from Amazon.com. A nightly build validates functionality to ensure its stability, but as Amazon provides no official API to use, this package may break at …

java-ngrok – a Java wrapper for ngrok

java-ngrok is a Java wrapper for ngrok that manages its own binary, making ngrok available via a convenient Java API. ngrok is a reverse proxy tool that opens secure tunnels from public URLs to localhost, perfect for exposing local web servers, building webhook integrations, enabling SSH access, testing chatbots, demoing from your own machine, and more, and it's made even more …

hookee – command line webhooks, on demand

hookee is a utility that provides command line webhooks, on demand! Dump useful request data to the console, process requests and responses, customize response data, and configure hookee and its routes further in any number of ways through custom plugins. Installation: hookee is available on PyPI and can be installed using pip (`pip install hookee`) or conda (`conda install -c conda-forge hookee`). That’s …
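hookee's own internals aren't shown in this excerpt. As a rough, standard-library-only illustration of the "dump useful request data to the console" idea, the sketch below runs a local HTTP server that prints each POSTed request's path, headers, and body. The names `DumpHandler`, `serve`, and `captured` are hypothetical, and this is not how hookee is implemented; to receive real webhooks from the internet you would also need a public tunnel such as ngrok.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical in-memory log of every request received, for later inspection.
captured = []


class DumpHandler(BaseHTTPRequestHandler):
    """Print each incoming POST request to the console and reply with 200 OK."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length).decode("utf-8")
        record = {"path": self.path, "headers": dict(self.headers), "body": body}
        captured.append(record)
        print(json.dumps(record, indent=2))  # "dump" the request data

        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.end_headers()
        self.wfile.write(b"OK")

    def log_message(self, format, *args):
        pass  # silence the default access log; the JSON dump is enough


def serve(port=8000):
    """Start the server on localhost in a background thread (port 0 = any free port)."""
    server = HTTPServer(("127.0.0.1", port), DumpHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Calling `serve()` and then POSTing to `http://127.0.0.1:8000/` prints the request as JSON; call `server.shutdown()` to stop it.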

pyngrok – a Python wrapper for ngrok

pyngrok is a Python wrapper for ngrok that manages its own binary and puts it on your path, making ngrok readily available from anywhere on the command line and via a convenient Python API. ngrok is a reverse proxy tool that opens secure tunnels from public URLs to localhost, perfect for exposing local web servers, building webhook integrations, enabling SSH access, …

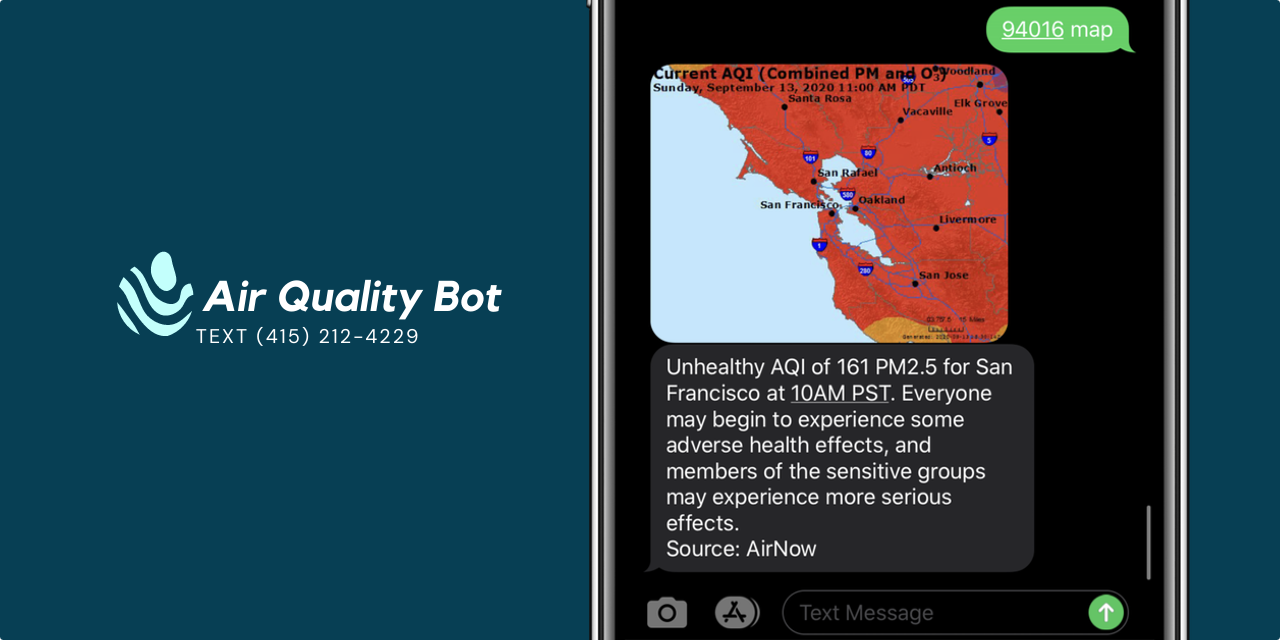

Twilio-Powered Air Quality Texting Service

With wildfire season upon us, use this handy texting tool to find out what the air quality is in your area. Simply text your zip code to (415) 212-4229 for air quality updates. You can also add “map” to the text to be sent an image of your region. This service isn’t just useful for individuals with …
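The texting protocol described above (send a ZIP code, optionally with the word "map") could be parsed along these lines. This is a minimal sketch, not the service's actual code: the function name `parse_sms` and the rule that a ZIP code is a standalone five-digit number are assumptions, and it doesn't call the Twilio API at all; it only shows how a message body might be interpreted.

```python
import re
from typing import Optional, Tuple

# Assumption: a US ZIP code is any standalone run of exactly five digits.
ZIP_RE = re.compile(r"\b(\d{5})\b")
# The keyword "map" as a whole word, in any letter case.
MAP_RE = re.compile(r"\bmap\b", re.IGNORECASE)


def parse_sms(body: str) -> Tuple[Optional[str], bool]:
    """Hypothetical parser for an incoming text message body.

    Returns the first five-digit ZIP code found (or None) and whether
    the word "map" appears, which would request an image of the region.
    """
    match = ZIP_RE.search(body)
    zip_code = match.group(1) if match else None
    wants_map = MAP_RE.search(body) is not None
    return zip_code, wants_map
```

For example, `parse_sms("94103 map")` returns `("94103", True)`, while a message with no ZIP code returns `(None, False)`, which a real service might answer with a usage hint.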

Alex and Jess Are Raising Our Future

“Talk is cheap.” That’s what we say. And, to a degree, it’s true. But bear this in mind: all action is precipitated by talk. People will often try to silence your voice expressly for that reason, because they know it will lead to action. In an age when hating on millennials is trendy, dismissing the value …

Django Dropzone Uploader

Ever been on a trip and, upon return, needed a quick and easy way for all your friends to send you their pictures and videos without burning CDs, sending massive emails, or using third-party services? Or, maybe a better question, ever wondered how to construct a basic Django application with Amazon’s web services, for instance …

DD-WRT NAT Loopback Issue

NAT loopback is what your router performs when you try to access your external IP address from within your LAN. For instance, say your router forwards port 80 to a web server on your LAN. From an outside network, you could simply visit your external IP address from a browser to access the web server. …
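The condition described above, a LAN host addressing the router's own external IP, can be modeled in a few lines. This is a minimal sketch under stated assumptions, not DD-WRT code: the helper names `needs_nat_loopback` and `loopback_target`, the LAN `192.168.1.0/24`, the external address `203.0.113.7` (a documentation range), and the single port-80 forward to `192.168.1.10` are all hypothetical.

```python
import ipaddress
from typing import Dict, Optional


def needs_nat_loopback(src_ip: str, dst_ip: str, lan_network: str, wan_ip: str) -> bool:
    """True when a host inside the LAN sends traffic to the router's own WAN IP."""
    src = ipaddress.ip_address(src_ip)
    dst = ipaddress.ip_address(dst_ip)
    return src in ipaddress.ip_network(lan_network) and dst == ipaddress.ip_address(wan_ip)


def loopback_target(
    src_ip: str,
    dst_ip: str,
    dst_port: int,
    lan_network: str,
    wan_ip: str,
    port_forwards: Dict[int, str],
) -> Optional[str]:
    """Return the LAN server a hairpinned request should be redirected to, or None.

    port_forwards maps an external port to the LAN IP it is forwarded to,
    mirroring an ordinary port-forwarding rule.
    """
    if not needs_nat_loopback(src_ip, dst_ip, lan_network, wan_ip):
        return None  # not a loopback case: normal routing/forwarding applies
    return port_forwards.get(dst_port)
```

With `{80: "192.168.1.10"}` as the forwarding table, a request from `192.168.1.50` to `203.0.113.7:80` resolves to `192.168.1.10`, the same server an outside visitor would reach; a router without NAT loopback support simply fails to perform this redirection.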